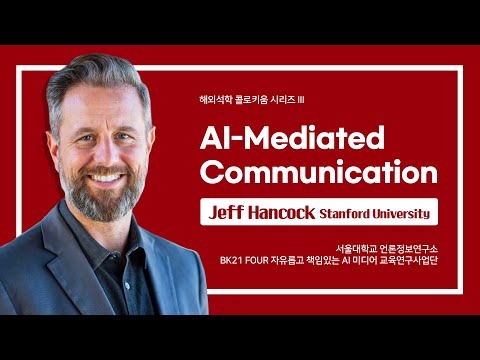

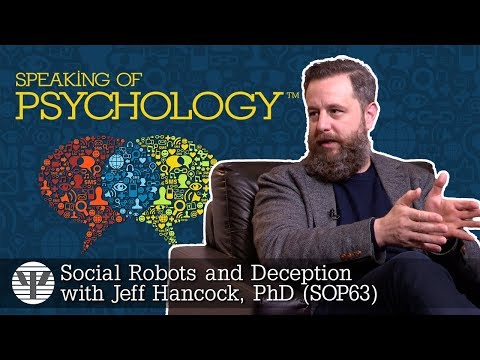

Learn More About Jeff Hancock

The proliferation of social media in our daily lives has transformed the way we communicate – and it’s influencing everything, from how we deceive and trust one another, to our mental and emotional well-being. Jeff Hancock, Ph.D., is helping individuals and companies alike understand how, and why it matters.

A leading expert in social media behavior and the psychology of online interaction, Dr. Hancock studies the impact of social media and technology on self-esteem and relationships, deception and trust, how we form impressions of others and how we manage others’ impressions of ourselves, and more. His view isn’t that social media is good or bad, but that it amplifies everything – and we must learn to navigate the effects.

Dr. Hancock is a professor in the Department of Communication at Stanford University, co-director of the Stanford Cyber Policy Center, and senior fellow at the Freeman Spogli Institute. He founded Stanford’s Social Media Lab and serves as director of Stanford’s Center for Computational Social Science. Under his leadership, a trailblazing team uses computer algorithms and social experiments to offer new insight into why and how we lie, and the role technology plays in shaping deception and trust.

His TED talk, The Future of Lying, has been viewed more than a million times, and he’s frequently called upon to speak to wide-ranging audiences, including insurance and accounting professionals, IT and security companies, and parent groups. While the context varies, concerns about a post-truth society persist, and expert guidance on how to think about truth and trust in the digital era is in high demand. Dr. Hancock also helps businesses manage reputation and trust issues; lectures on the impact of fake news, misinformation and media manipulation; and even helps people looking for love decode online dating profiles.

Hancock’s now-famous Facebook Emotional Contagion study triggered a worldwide conversation about expectations and ethics in the digital age. The study, which provoked outrage among social media users by intentionally flooding their news feeds with negativity, made it clear that when companies do not understand people’s simplified, and often misguided, beliefs about the nature of digital platforms – which Hancock calls “folk theories” – the consequences can be disastrous.

An energetic and innovative lecturer, Dr. Hancock uses interactive and real-time analysis tools to involve audiences and measure what’s true or false. His engaging approach has resulted in several prestigious teaching awards, including the Rising Star Faculty Award from Cornell University, the Merrill Presidential Outstanding Educator Award, the CALS Young Faculty Teaching Excellence Award and the SUNY Chancellor’s Award for Excellence in Teaching.

The New York Times, CNN, NPR, CBS and the BBC are among the many mainstream news outlets that seek Dr. Hancock’s expertise in social media and how technology mediates communication. His research has appeared in more than 80 journal articles and conference proceedings.

Before earning his Ph.D. in psychology from Dalhousie University in Canada, Dr. Hancock served as a Canadian customs officer. Prior to joining the Stanford faculty in 2015, he served as associate professor of cognitive science and communication at Cornell University.

Jeff Hancock is available to advise your organization via virtual and in-person consulting meetings, interactive workshops and customized keynotes through the exclusive representation of Stern Speakers & Advisors, a division of Stern Strategy Group®.

Rewiring How We Think About Truth and Trust in the Tech Era

A new trust framework is emerging – fueled by social, economic and technological forces that will profoundly alter how we trust, not only what we see and read online, but also one another. Many believe technology is the villain – an enabler of lies and deception online – but Jeff Hancock believes the opposite is true: it’s our intuition that is off. And that changes everything.

In today’s collaborative economy and sharing society, Hancock explains why we’re actually trusting one another more, not less. But he also says not to throw out the psychology book just because technology is involved. Hancock will convince you that the real disruption taking place today isn’t technological, it’s psychological. He discusses how principles from psychology and communication explain the ways deception and trust intersect with technology. He also reveals several key principles that can guide how we think about truth and trust on the internet, and how we – as individuals and as businesses – can rewire the ways we detect lies and better gauge truth.

This shift will lead to new and sometimes counterintuitive approaches to using technology to trust more, not less. The implications are profound and far-reaching – from how we sell products to how we manage people, and from how we love to how we teach and parent.

Tapping the Power of Social Media – and Why It Can Be More Positive Than You Believe

Parents, companies and former social media executives are all raising alarms about the alleged psychological impact of social media, from worries about addiction to fears of creating a new generation of misfit narcissists. Jeff Hancock argues that more than a decade of research strongly suggests that these concerns are misguided, and that they cause misplaced anxiety and harm for young people, families and businesses. Hancock’s insights show, instead, how to leverage social media to enhance the human experience, helping us grow and thrive when we use it well.

Hancock, one of the authors of Facebook’s much-discussed Emotion Study, takes a closer look at how social media can influence our physical, psychological, and emotional well-being, and how it can help, not hinder, performance at work, the development and maintenance of relationships, and even people battling depression, narcissism and addiction. He draws from a large analysis of every published study conducted on the topic, along with his own lab’s work showing how online interaction can strengthen our self-confidence and perception of emotional support. Social media is controversial, but the fact remains that it is here to stay. Hancock’s advice is for us to calm down and take advantage of several key, concrete strategies that parents and business leaders alike can use to avoid the pitfalls of digital platforms and instead tap into their powers for good.

Understanding Our Understanding: The Folk Theories of Technology

We instinctively understand gravity, and we know its basic laws and implications from a young age. However, most of us have no idea how gravity actually works. Instead we have folk theories, or intuitive understandings, about how complex systems operate. Jeff Hancock argues that we also use folk theories when we engage with modern technology, like Facebook’s news feed, which is incredibly complex and incomprehensible for most people but affects billions of business transactions and social behaviors.

The dramatic explosion of the ongoing “Fake News” scandal shows what can happen when tech companies design platforms and products without regard to how average people understand them, and when a gap in understanding between Silicon Valley and the wider world persists unaddressed. In this presentation, based on research triggered by the backlash he experienced after altering users’ Facebook news feeds for a social psychology experiment, Hancock explains how the average consumer’s understanding of modern technology rests on assumptions that do not always turn out to be true. He demonstrates how tech companies can design products to be more in line with people’s expectations and suggests how consumers can adopt alternative folk theories that are more realistic about the operations and limits of digital platforms.

Revolutionizing Recruiting: How to Find (and Keep) the Best and Brightest While Weeding Out the Bad Apples

In the digital age, human resources professionals are bombarded daily with applications for positions. As applying for positions has become easier, an increasing number of people do so without much thought, and often lack the required skills or personal attributes. And yet, HR professionals must sift through the endless stream of information and applicants, separating the credible from the incredible, the real from the fake, the honest from the dishonest, the competent from the incompetent. And once a bad apple has been hired, the damage to an organization and the drain on HR can be tremendous. In this presentation, Jeff Hancock explains how the burdensome process of hiring, as well as managing employees, can be streamlined with novel ways of measuring an applicant’s or employee’s behavior and communication patterns through their digital trails.

Drawing on his research into corporate hiring practices and his extensive research examining psychopaths, Hancock bridges the gap between HR and the social sciences, allowing HR professionals to assess authenticity, credibility, curiosity and performance in a more systematic way. He says that people in HR, and in business in general, must behave more like social scientists, focusing on proven metrics rather than relying on intuition in analyzing the never-ending information stream. Hancock argues that, much like the Moneyball revolution in sports, new metrics and data science, instead of gut instincts, will revolutionize hiring. By comparing and contrasting the language used by applicants or employees of clearly different calibers, Hancock’s methods can identify in advance who will be an asset to the business, and who will not. He can also help hiring managers identify who might be a bad actor (like a psychopath) and help manage these individuals once they’ve entered an organization.

Generative AI Are More Truth-Biased Than Humans: A Replication and Extension of Core Truth-Default Theory Principles

(Journal of Language and Social Psychology, December 2023)

Linguistic Markers of Inherently False AI Communication and Intentionally False Human Communication: Evidence From Hotel Reviews

(Journal of Language and Social Psychology, September 2023)

People, Places, and Time: A Large-Scale, Longitudinal Study of Transformed Avatars and Environmental Context in Group Interaction in the Metaverse

(Journal of Computer-Mediated Communication, December 2022)

Impact Report: Evaluating PEN America's Media Literacy Program

(PEN America & Stanford Social Media Lab, September 2022)

The Algorithmic Crystal: Conceptualizing the Self through Algorithmic Personalization on TikTok

(Association for Computing Machinery, August 2022)

Jury Learning: Integrating Dissenting Voices into Machine Learning Models

(Cornell University, February 2022)

Not All AI are Equal: Exploring the Accessibility of AI-Mediated Communication Technology

(Computers in Human Behavior, August 2021)

Key Considerations for Incorporating Conversational AI in Psychotherapy

(Frontiers in Psychiatry, October 2019)

Experimental Evidence of Massive-Scale Emotional Contagion Through Social Networks

(Proceedings of the National Academy of Sciences of the United States of America, July 22, 2014)

Mirror, Mirror on my Facebook Wall: Effects of Exposure to Facebook on Self-Esteem

(Cyberpsychology, Behavior, and Social Networking, February 17, 2011)

Separating Fact From Fiction: An Examination of Deceptive Self-Presentation in Online Dating Profiles

(Personality and Social Psychology Bulletin, May 9, 2008)

On Lying and Being Lied To: A Linguistic Analysis of Deception in Computer-Mediated Communication

(Discourse Processes, March 28, 2008)

Deception and Design: The Impact of Communication Technology on Lying Behavior

(Association for Computing Machinery, April 2004)